Yuan-Hong Liao

Toronto, Canada

I am on the job market for an industry role starting mid 2025. If my research aligns with your needs, please feel free to reach out via email.

I am a final-year Ph.D. student at the University of Toronto and the Vector Institute, where I am fortunate to be supervised by Prof. Sanja Fidler. Previously, I was a CV/ML scientist intern at the NVIDIA Toronto AI Lab (2022-2023) and on the Amazon Astros team (2024).

My research centers on two areas: visual data labeling and vision-language models.

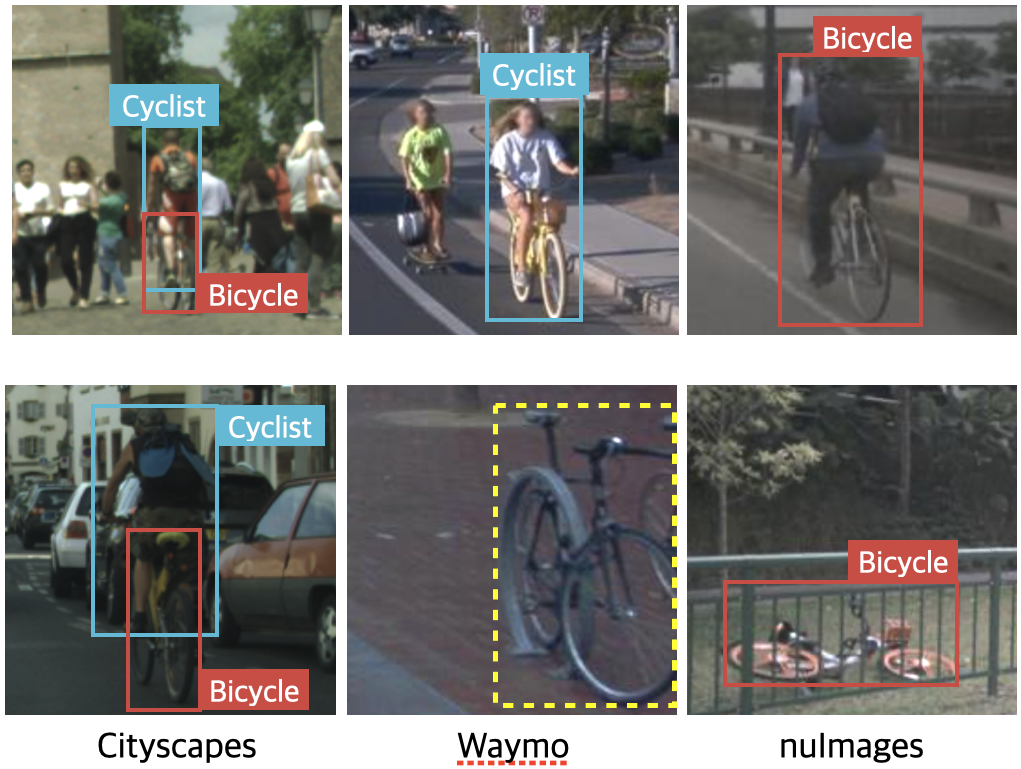

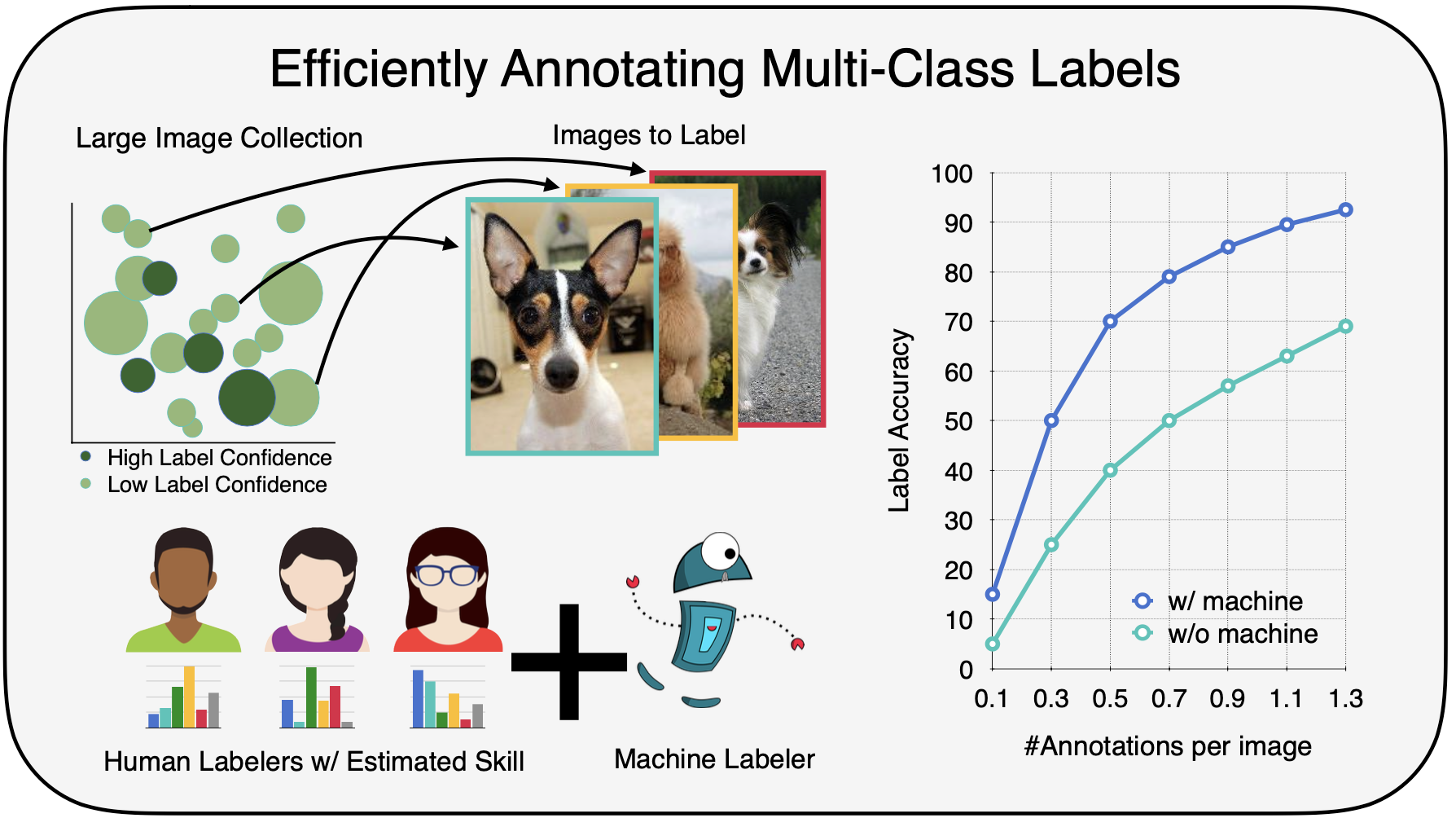

- 🖼️ Visual labeling: I develop methods to reduce crowdsourced labeling costs [CVPR’21] and fix semantic inconsistencies in real-world datasets [ICLR’24].

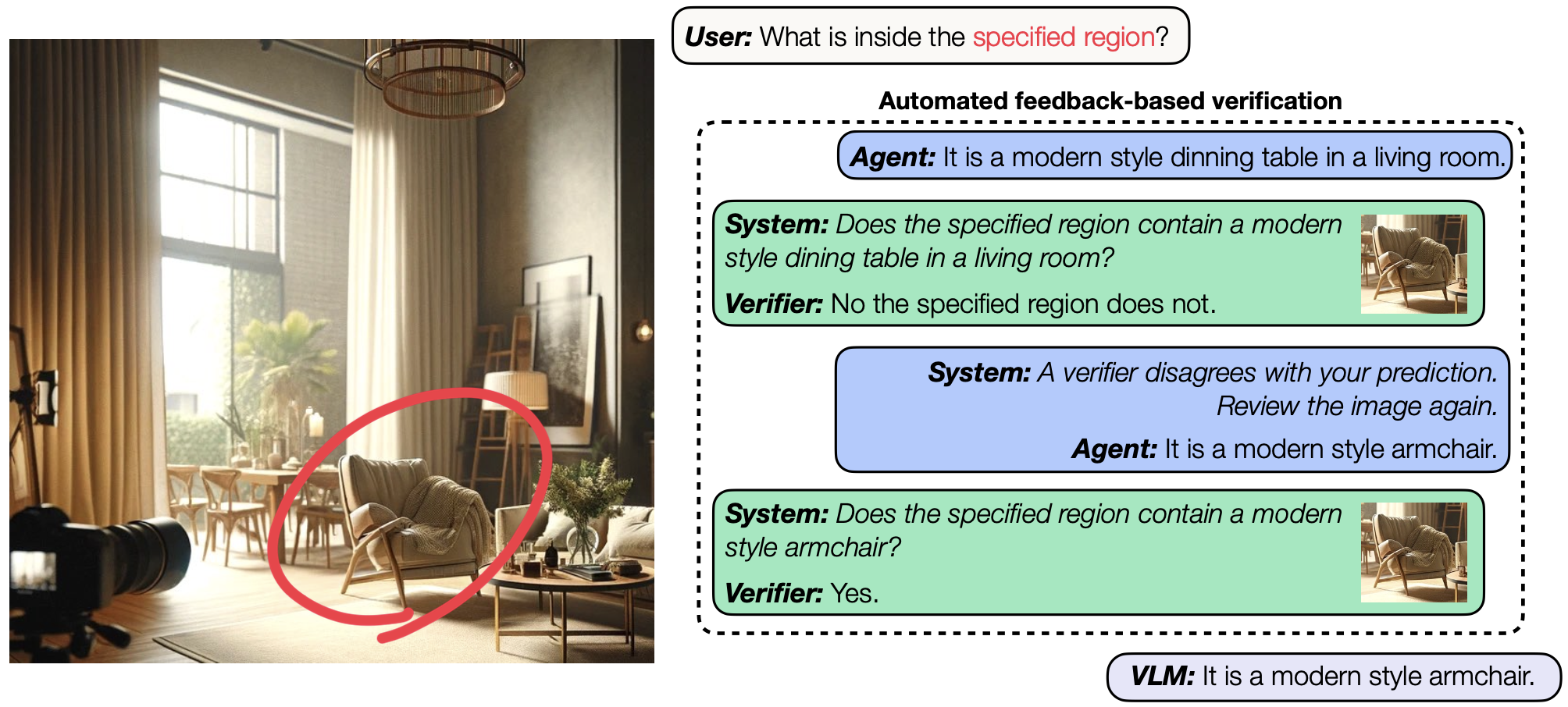

- 🧠 Vision-language models: I improve the spatial reasoning of vision-language models [EMNLP’24], enable self-correction during inference [CVPR’25], and promote system-2 thinking in vision-centric tasks [arXiv’25].

Check my resume here (last updated in April 2025)

Previous experiences

Prior to my Ph.D., I was a visiting student at the Vector Institute (2018) and USC (2017). I was fortunate to start my AI research at National Tsing Hua University, supervised by Prof. Min Sun.

news

| Date | |
|---|---|
| Jul 08, 2025 | |
| May 21, 2025 | |
| Apr 23, 2025 | |
| Feb 26, 2025 | |
| Sep 20, 2024 | |